A new paradigm for policymakers to establish agile and robust AI governance

This essay provides an in-depth roadmap for applying Decentralized Rulebook technology to establish safe, democratic, and adaptive AI governance.

Authors

Mike Harris

Bryce Willem

AI Governance, Risk Management, Policy-making, Response Essay


June 13, 2023

As we navigate the labyrinth of policymaking, it becomes abundantly clear that the objective is not simply to devise a set of rules, but to architect systems of incentives and disincentives in the pursuit of the world’s collective interests.

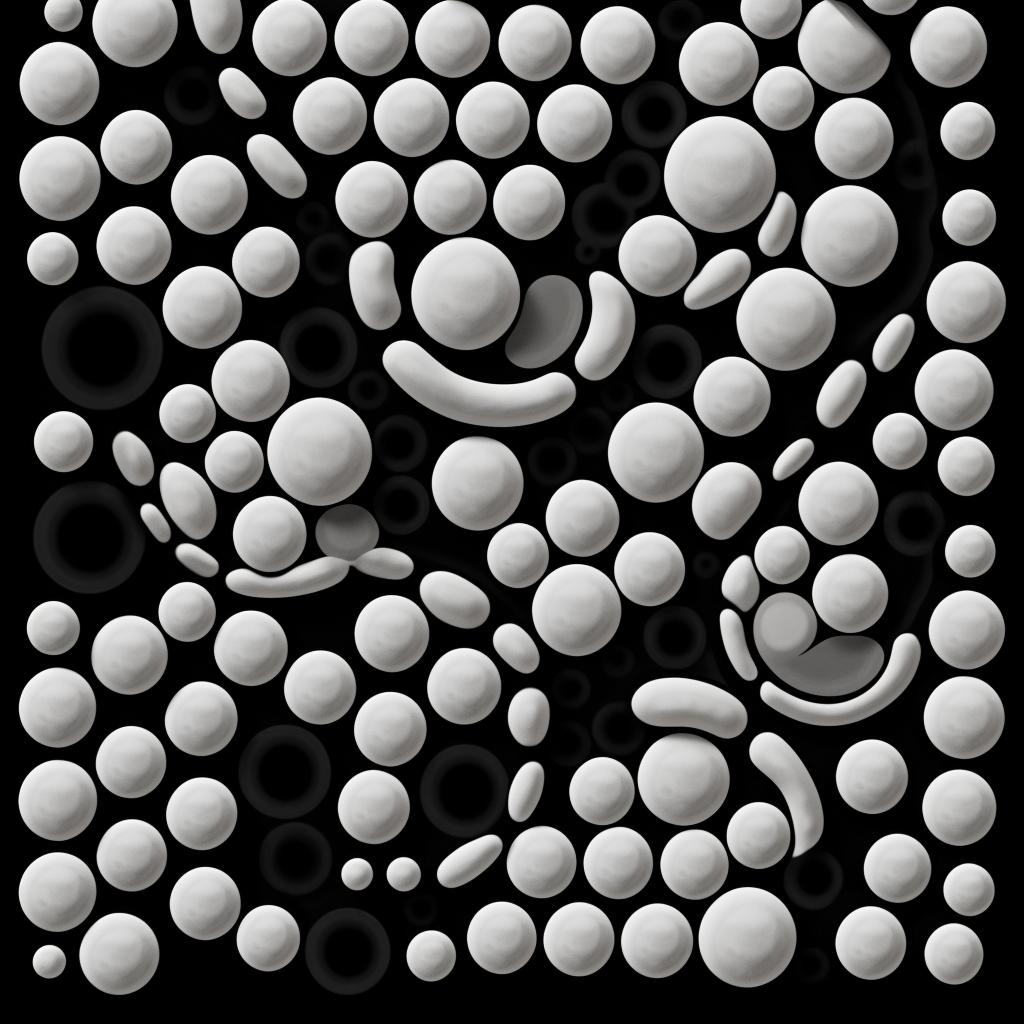

Policies, in general, can be visualized as a network of interconnected dials, each influencing and being influenced by others in unending interactions of cause and effect.

Yet, much as we might wish otherwise, our capacity to predict the resultant effects of adjusting these dials remains far from perfect. The specter of unintended consequences looms large, ever-present, reminding us of the inherent uncertainty embedded within our best intentions and efforts.

Taking today’s top Internet governance issue of Artificial Intelligence (AI), we encounter a unique conundrum. Here we have a technology that, by virtue of its replicable nature, holds the potential to engage in discourse with every human being simultaneously. The implications are profound and manifold. We, as social beings, default to trust in conversation with others. This trust, when extended to a Large Language Model (LLM), subtly opens us to assimilating aspects of its training, thus adding further layers of complexity to the potential consequences of our policy decisions.

In this epoch, where AI and the Internet's global reach are undeniably shaping our shared reality, the urgency for a resilient governance model that mitigates AI’s risks is reaching a crescendo. The present landscape is fraught with highly specific or disjointed initiatives and fundamentally lacks a comprehensive regulatory framework. This is a formidable challenge to be overcome.

The global nature of AI and the Internet resists a one-size-fits-all solution. Any proposal for centralized global governance would inevitably face resistance from nations fearing the relinquishment of their autonomy. This impasse necessitates a governance model that is decentralized and able to fluidly scale with respect to its application – to be large enough to encompass global aspects of AI and the Internet, yet small enough to adapt to local, specialized, or even individual contexts.


Amid this complexity, Decentralized Rulebook technology offers a novel roadmap forward. It provides a promising solution that could effectively address the diverse concerns brought about by AI's omnipresence. This approach allows us to preserve the intrinsic values of individual nations while responding adeptly to the global challenges presented by AI.

This essay unfolds in a structured format. It opens with a concise, contextually grounded introduction to Rulebooks and their principles. The discussion is then divided into three segments, each tackling a daunting regulatory issue central to AI governance. These segments seek to reconcile the dichotomies that underlie AI governance: 1) Sovereignty and Inclusivity, 2) Individualism and Collectivism, and 3) Regulation, Transparency, and Autonomy.

Rulebooks: Voluntary transactional governance

Decentralized Rulebooks are a novel Transactional Governance framework, enabling a dynamic and adaptable approach to governance by design.

A Rulebook is an open-ended document that anyone can create to define rules. Governance is enacted between Leads and Moderators on the network. These entities form a robust reputation system of Trust Networks that links global participants to their respective jurisdictions for a specific context and bridges the gap between the virtual and real world. Users voluntarily subscribe to the Rulebooks they find desirable and receive portable credentials granting permissions with respect to those rules.

The system emphasizes voluntary participation by users on the network and acknowledges the importance of context-specific nuances. At its core, it is rooted in the idea of "Proving Current Honesty," and it allows anyone to take on any role. Stepping outside the bounds of the Rulebook results in the revocation of privileges, and this penalty is enforced only when the transgressor seeks to reintegrate into the system.
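To make the moving parts concrete, here is a minimal Python sketch of the subscribe-and-revoke cycle described above. It is an illustration under stated assumptions, not the Rulebooks implementation: the class names, fields, and methods (`Rulebook`, `Moderator`, `subscribe`, `report_violation`) are hypothetical, credentials are modeled as plain sets of permission strings, and Leads and Trust Networks are left out for brevity.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Rulebook:
    """Hypothetical minimal Rulebook: open-ended rules plus the permissions they unlock."""
    name: str
    rules: dict[str, str]   # rule id -> human-readable rule text
    permissions: set[str]   # permissions granted to subscribers in good standing


@dataclass
class Moderator:
    """Hypothetical Moderator that interprets one Rulebook for one jurisdiction."""
    name: str
    jurisdiction: str
    rulebook: Rulebook
    revoked: set[str] = field(default_factory=set)  # users who stepped outside the rules

    def subscribe(self, user_id: str) -> frozenset[str]:
        """Voluntary opt-in: return the permissions carried on the user's credential.

        Revocation is checked here, so it only bites when a transgressor
        tries to (re)join the system.
        """
        if user_id in self.revoked:
            return frozenset()
        return frozenset(self.rulebook.permissions)

    def report_violation(self, user_id: str) -> None:
        """Record that a user stepped outside the Rulebook."""
        self.revoked.add(user_id)


book = Rulebook("label-synthetic-media",
                {"R1": "Label AI-generated images and video"},
                {"post_media"})
mod = Moderator("mod-a", "NZ", book)
credential = mod.subscribe("alice")   # frozenset({'post_media'})
mod.report_violation("alice")
```

Note that `credential`, once issued, is not clawed back in this sketch; in keeping with the description above, the revocation only takes effect when Alice asks the Moderator for a new credential.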

A key property of this system is its frugal resource footprint, allowing the deployment of narrowly defined Rulebooks with minimal standards across a diverse spectrum of contexts.

This progressive approach embraces regional diversity, thus addressing complex governance conundrums without imposing undue restrictions on activities. Rulebooks emerge as permissionless systems of self-regulation, with rules collectively agreed upon to protect users' online interactions. This paradigm is pertinent when navigating the terrain of AI governance, a domain characterized by a multitude of stakeholders each with their unique interests and perspectives, often at odds with one another.

As we venture further into the topic of AI, the critical question arises: what rules should govern these systems? This isn't a trivial matter. It's essential to establish robust norms that AI should adhere to while remaining within the confines of the legal framework. It's a delicate balancing act, one that requires in-depth understanding and discerning judgment.

The economic and societal impacts of AI will be profound, permeating every aspect of our lives. A key challenge is governing the cascading effects of such a transformative technology. From altering the employment landscape to influencing the very fabric of our societies, the repercussions of AI are vast and unpredictable. This calls for a coherent, adaptive framework that can respond both rapidly and effectively to the evolving landscape.

Necessity of experimentation

The governance of the web necessitates an ability to stimulate change on the Internet by manipulating various ‘policy dials’. Each dial signifies one of the many equilibria we must strike to produce an optimal, resilient, and equitable system.

The first of these dials reflects the balance between inclusion and exclusion. This balance ensures that while the system allows for broad participation, it also places necessary limitations to ensure quality and security.

Next, the tension between individualism and collectivism must be addressed. This dial seeks to balance the need to respect personal autonomy and freedoms, while still ensuring that the collective good is prioritized and communal goals are met.

The interplay between regulation and innovation forms another crucial balance. While necessary rules and regulations are needed to guide AI development and usage, they must not be so restrictive that they stifle the very creativity and progress they seek to guide.

We also need to fine-tune the balance between transparency and security. While open, accessible information fosters trust and understanding, it must not compromise the vital security measures needed to protect AI systems and their users.

Finally, the dial of autonomy and control must be carefully adjusted. While AI systems need the freedom to learn and evolve, this must be counterbalanced by sufficient oversight to prevent harmful or undesirable outcomes.

In navigating the landscape of AI governance, we must recognize and embrace the need for experimentation to achieve optimal outcomes for humanity. This necessity echoes in the various democratic processes and legal frameworks that different nations uphold; they offer a wealth of experiential insights crucial for addressing the intricate, multifaceted challenges of AI governance.

The accelerating pace of AI development underscores the urgency of a polycentric governance model that embodies adaptability, cost-effectiveness, and agile responsiveness to emerging challenges. This model needs to promote safety, intelligibility, and accessibility, making the complex world of AI understandable and manageable for everyone. The task at hand is not merely to traverse this landscape but to harness the distinct dimensions represented by the governance dials, striking an optimal balance throughout our AI governance journey.

First challenge

Sovereignty vs. Utility

Sovereignty and utility present themselves as two contrasting aspects at the heart of the governance dilemma. From a computational standpoint, it may be easier to deploy a homogeneous utility, a one-size-fits-all approach to Internet governance that rises above most existing laws due to the lack of detailed regulation at a global level. However, this disregards the imminent reality that most countries will soon enact laws applicable to this realm, thereby tilting power towards those nations that can force the platforms to adopt them.

A centralized approach, leaning too far towards utility, risks eroding the sovereignty and cultural diversity of nations, further steering the world towards a homogenized, predominantly western-centric, growth-oriented ideology. This tendency undermines the richness of global plurality and may alienate powerful nations, such as China.

The exclusion fostered by this bias can fuel divisive narratives, whereas the optimum path towards collective good likely lies in a balanced integration of sovereignty and utility. Hence, any governance model must foster inclusivity, promote global cooperation, and respect the sovereignty of nations.

Sovereign nations should, rightfully, have the autonomy to restrict and grant access as they deem fit, within universally accepted human rights boundaries. Yet, an overt swing towards sovereignty could also embolden autocrats and those inclined to exploit systems for self-interest.

The balance in AI governance requires delicately tuning the dials of sovereignty and utility towards an equilibrium that champions plurality, respects national autonomy, and safeguards against undue exploitation. It's a path towards acknowledging the importance of a diverse, inclusive, and participatory global framework that respects the emergence of new, detailed Internet laws across various nations.

Inclusion vs. Exclusion

It is our similarities that bind us together and our differences that progress us.

In designing governance systems, the principle of inclusivity is paramount. It requires every participant, irrespective of their position or sway, to have a voice in decision-making. Simultaneously, the intricate nature of the task calls for some measure of exclusivity, empowering select entities, deemed competent and responsible. Harmonizing these ostensibly contradicting needs poses a significant challenge.

The dynamic between inclusivity and exclusivity is not a stranger to our societal structures. Consider the medical profession: while knowledge acquisition is open to all, only certified individuals are permitted to practice. Still, we find room for exceptions, a testament to the notion that unmitigated exclusivity is not always practical or advantageous.

An arrangement confined to specific actors should remain open to exceptions, much as a doctor, a privileged player, may have to step beyond their licensing boundaries during an emergency. This seemingly paradoxical coexistence of exclusivity with exceptions is an essential part of the fabric of fair, equitable, and effective governance.

The emergence of AI and LLMs introduces a significant shift in our relationship with knowledge and power. Traditionally, gaining power through knowledge has been a journey, an iterative process of learning, understanding, and maturing. It involves the absorption of facts and figures, but more importantly, it can encompass the development of context, perspective, and empathy.

With AI, specifically LLMs, we find ourselves in a new reality where access to vast information and its consequent power can be immediate. This sudden empowerment, devoid of typical moderation and empathy that can come from the learning journey, could result in actions taken without a thorough understanding of their potential repercussions.

In this new dynamic, the challenge is to adapt our systems in a way that encourages not just the consumption of information, but also the development of the wisdom and empathy that traditionally accompany the learning process. While questions about the extremes remain to be answered, our central concern must be integrating AI into our societies in a thoughtful, beneficial manner.

With that said, the privilege to engage with AI in diverse manners should be universally accessible. The user's choice to employ AI as a neutral sounding board for refining ideas or as a reflection of societal extremes should remain unrestricted. Nevertheless, these privileges carry inherent risks, underscoring the need for apt controls and regulatory oversight.


AI, with its potential to mirror the world back to us, has profound implications for how we interact as a global society and how our civilization evolves. This dual-edged sword of potential and risk necessitates caution in its application. While homogeneity can be a catalyst for unity, it may not be the desirable outcome we seek.

We often lack insight into our best interests. Hence, adopting a cautious approach and experimenting on smaller scales initially could mitigate potential missteps. Balancing the dials of inclusion, privilege, and control is critical to the next phase of AI governance.

Resolving Tensions of Inclusivity and Sovereignty

Rulebooks integrate seamlessly with jurisdictions, whether these are narrow or wide, simple or complex. They provide an informational representation of jurisdictions through appointed representatives referred to as Leads and Moderators. When users within these specific regions choose to align themselves with their respective Moderators, the regional laws applicable to them are triggered. A standout strength of this system is its adaptability and compatibility with regional and international legal frameworks, irrespective of their Western or non-Western origins, thereby reducing any potential bias towards Western-centricity.

In circumstances where regional and international norms conflict, the responsibility to resolve the disagreement naturally devolves to diplomatic channels. This involves a careful balance between national autonomy and global collaboration, a balance achieved through multistakeholder governance. If policymakers haven't yet provided clear guidelines, utilities can lean towards either regional or international norms.

The framework also offers a response to situations in which a country breaches international law and human rights. Here, it is considered acceptable for another nation that respects these laws to offer what is termed 'digital asylum' to those affected, through Moderators beyond the violating country's borders. Because users are anonymous and power is spread across numerous Moderators, oppressed users can effectively disappear into the crowd. This arrangement helps digital asylum seekers avoid detection and stay safe, but they still need to find someone to support them and remain bound by the Rulebook.
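The jurisdictional logic of the last three paragraphs can be sketched as a small rule-resolution function. This is a hypothetical illustration: the region codes, rule names, status strings, and the `lean` parameter are invented for the example, and real conflicts would be settled through diplomatic and multistakeholder channels rather than a dictionary merge. The sketch shows two properties: the rules that apply follow the Moderator a user aligns with (which is what makes digital asylum possible), and a utility's fallback preference decides conflicts that policymakers have not yet resolved.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical example data: each rule maps to a simple status string.
REGIONAL_LAWS: dict[str, dict[str, str]] = {
    "A": {"political_satire": "forbidden", "ai_labeling": "required"},
    "B": {"political_satire": "allowed", "ai_labeling": "required"},
}
INTERNATIONAL_NORMS: dict[str, str] = {"political_satire": "allowed"}


@dataclass(frozen=True)
class Moderator:
    name: str
    jurisdiction: str  # the region whose laws this Moderator represents


def conflicts(jurisdiction: str) -> set[str]:
    """Rules on which regional law and international norms disagree."""
    regional = REGIONAL_LAWS.get(jurisdiction, {})
    return {rule for rule, status in regional.items()
            if rule in INTERNATIONAL_NORMS and INTERNATIONAL_NORMS[rule] != status}


def applicable_rules(moderator: Moderator, lean: str = "regional") -> dict[str, str]:
    """Rules triggered when a user aligns with `moderator`.

    Rules follow the chosen Moderator's jurisdiction, not the user's location.
    `lean` is the utility's fallback ("regional" or "international") for
    unresolved conflicts; non-conflicting rules from both sources always apply.
    """
    if lean not in ("regional", "international"):
        raise ValueError("lean must be 'regional' or 'international'")
    regional = REGIONAL_LAWS.get(moderator.jurisdiction, {})
    if lean == "regional":
        return {**INTERNATIONAL_NORMS, **regional}   # regional wins on conflicts
    return {**regional, **INTERNATIONAL_NORMS}       # international wins on conflicts


local = Moderator("mod-a", "A")
foreign = Moderator("mod-b", "B")  # e.g. a user located in A seeking digital asylum
```

A user located in region A who aligns with `foreign` receives region B's rules, which is the digital-asylum case described above.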

Policymaking often requires a level of generalization that can hamper action due to inherent complexities. Rulebooks address this by enabling us to define a focused governance context and establish highly specific standards, which makes policy definition easier. The voluntary and transaction-specific nature of the system allows for this nuanced approach; the next part examines one example, curbing AI-generated misinformation without stifling innovation and creativity.

Rulebooks’ polymorphic quality has the potential to reshape how we interact with AI. By allowing for a top-down communication structure that sets the boundaries of acceptable activities and a bottom-up governance framework that provides granular control over the details, Rulebooks can create an intricate equilibrium between inclusion and exclusivity.

To better illustrate this, consider an AI used for curating and recommending content to users. By default, the AI's Rulebook might be set to the safest, most universally acceptable parameters, presenting content that would be suitable even for a child. This ensures that, at a base level, the AI avoids promoting harmful or misleading information and supports a diverse range of content, actively preventing echo chambers.

However, users are not limited by these default settings. They have the option to select from a set of pre-defined Moderators that grant various permissions to adjust the AI's behavior within the boundaries of the law, adding a layer of intentional customization to their interactions. They may wish for a less filtered content feed or more tailored recommendations based on their specific interests.

Alternatively, users may adjust specific rules within the Rulebook, overriding the default settings to customize their experience, but always within the bounds defined by the Rulebook document. They could fine-tune the AI to be more lenient or strict on certain content types, control the diversity of content, or adjust how aggressively the AI personalizes recommendations.
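The layering of defaults, Moderator permissions, and user overrides can be sketched as follows. The setting names and numeric ranges are hypothetical, and the sketch assumes a Moderator's grant can be represented as a pre-defined profile of setting values. The property it illustrates is that every value is clamped to the bounds written in the Rulebook, so neither layer can push the AI outside them.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Setting:
    default: float  # safest value, suitable even for a child
    low: float      # lower bound defined by the Rulebook document
    high: float     # upper bound defined by the Rulebook document


# Hypothetical settings for a content-recommendation AI (0.0 = most conservative).
RULEBOOK = {
    "content_filter_leniency": Setting(default=0.0, low=0.0, high=0.8),
    "personalization_strength": Setting(default=0.2, low=0.0, high=1.0),
    "min_source_diversity": Setting(default=0.7, low=0.3, high=1.0),
}


def effective_settings(moderator_grants: dict[str, float] | None = None,
                       user_overrides: dict[str, float] | None = None) -> dict[str, float]:
    """Start from safe defaults, apply Moderator grants, then user overrides.

    Each value is clamped to the Rulebook's [low, high] range.
    Setting names the Rulebook does not define are ignored.
    """
    result = {name: s.default for name, s in RULEBOOK.items()}
    for layer in (moderator_grants or {}, user_overrides or {}):
        for name, value in layer.items():
            if name in RULEBOOK:
                s = RULEBOOK[name]
                result[name] = min(max(value, s.low), s.high)
    return result
```

For example, a user asking for `min_source_diversity` of 0.0 (a pure echo chamber) would receive 0.3, the Rulebook's floor.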

In both cases, the Rulebooks framework ensures that the user's preferences and rights are respected without compromising the necessary regulatory and ethical boundaries. It offers a model of governance that respects the individual's agency while ensuring collective security and legal compliance.


This system paves the way for the creation of subjective standards within specific contexts, empowering the network to interpret and expand upon these standards as an open market. It ensures flexibility and inclusivity, minimizing the risk of power concentration and exploitation due to its inherent decentralization.

In arenas where discord is commonplace, such as AI regulations, Rulebooks thrive. They provide a novel approach to conflicts, like those between East and West, where power struggles can often lead to seemingly irreconcilable differences. By outlining agreeable, low subjective standards that are interpreted and implemented network-wide, Rulebooks help to defuse tension. This approach dramatically reduces the risk of AI arms race scenarios, where political actors might act preemptively out of fear of being disadvantaged. Thus, Rulebooks not only promote more harmonious interactions but also encourage cooperation among diverse jurisdictions.

For managing potential missteps in AI implementation, particularly concerning the parameters of inclusion, privilege, and control, Rulebooks built on a solid multistakeholder governance footing provide a foundation to start on a smaller scale, learning and adapting as systems mature.

Second challenge

Individualism vs. Collectivism

The advent of AI has accelerated our journey into an era where the boundaries of reality are continually being reshaped. AI-generated content, ranging from deepfakes to text generated by Large Language Models (LLMs), is inundating the Internet, transforming it into a boundless realm of diverse narratives.

This explosive proliferation of content poses significant challenges, particularly regarding misinformation and disinformation, historically recognized as potent instruments of manipulation and control. Consequently, the determination of content veracity and its subsequent impact on the global psyche have emerged as paramount concerns for the future of the Internet and humanity.

In parallel, technology continues to profoundly influence our identities and interactions. Large global corporations like Facebook, driven by the goal of data extraction for targeted advertising, often resort to a strategy of homogenizing identities and interactions. While this approach may streamline user experiences and enable efficient content targeting, it threatens to simplify the rich diversity of individuality into commodifiable data points.

Swinging the pendulum to the other extreme leads us into an equally problematic realm. An excessively individualistic society, characterized by isolated echo chambers, may give rise to xenophobia, inflating tribal instincts and fostering societal divisions. An extreme manifestation of such a scenario could escalate tensions, potentially culminating in conflict, or even war. A societal mindset that labels differing views as wrong and enforces social penalties can stifle intellectual growth, inhibit discovery, and erode the foundations of an open society.

As we navigate this complex terrain, it is crucial to uphold the rights of individuals to hold and express their beliefs, even those considered erroneous. These beliefs often function as invaluable learning opportunities. They are not mere intellectual constructs but form part of the emotional tapestry of our lives, shaping our hopes, fears, and emotional landscape. While the propagation of such biases may present ethical conundrums, we must preserve the right to reject even the most robust contradicting evidence. Attempts to suppress these fundamental human emotions not only tend to be futile but could be detrimental. Even abstract beliefs, such as the existence of a deity, can empower individuals with courage in the face of adversity.

In this increasingly AI-dominated world, striking a balance between individualism and collectivism becomes an essential task. Our solutions must account for the multiplicity of narratives generated by AI, respect diverse perspectives, and ensure the sustainability of an open, inclusive Internet ecosystem. This delicate balancing act represents the pivotal challenge of our times and will profoundly shape the future course of our global digital landscape.

Resolving Tensions of Individualism and Collectivism

Rulebooks, underpinned by multistakeholder governance, are designed to harmonize the push-pull dynamics of individualism and collectivism. They serve to nurture diversity and promote vigorous debate while simultaneously allowing for the expansive application of policies on the global Internet.

This framework inherently respects and embraces linguistic diversity, catering to the challenges of controlling misinformation across a multitude of world languages. While a centralized platform might struggle to navigate these intricacies, the framework leans on the Lead-Moderator model. In this setup, Moderators specify the languages they support, organically offering a solution to the global language conundrum of misinformation control. This grassroots strategy ensures inclusivity and acknowledgment of linguistic diversity, mirroring the natural evolution of international discourse.

In addition, Rulebooks facilitate action. In an age where discourse often drowns out real progress, Rulebooks can convert these conversations into effective policies, bolstering the performance of existing multistakeholder governance processes.

In terms of reconciling individualism and collectivism, the system equips users with 'dials' of self-determinism. The collective principles of various groups and subgroups are defined within the system, facilitating the formation of shared guidelines that respect each group's unique attributes.

Simultaneously, the system accords users multiple choices, honoring individualism. Producers have the liberty to choose their Moderator, interpret rules for specific transactions, or even establish their own Moderator. They also have the power to exclude certain Leads or Moderators for certain transactions. These options offer a high degree of self-determination, allowing users to personalize their interactions to reflect their individual needs and values.
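A producer's choices in the Lead-Moderator model, including the language support described earlier, can be illustrated as a filter over available Moderators: keep those that support the language of the transaction, then drop any Lead or Moderator the producer has chosen to exclude. The `Moderator` fields and the `eligible_moderators` function are hypothetical names for this sketch.

```python
from __future__ import annotations

from collections.abc import Collection
from dataclasses import dataclass


@dataclass(frozen=True)
class Moderator:
    name: str
    lead: str                  # the Lead this Moderator is associated with
    languages: frozenset[str]  # languages the Moderator says it supports


def eligible_moderators(moderators: list[Moderator], language: str,
                        excluded_leads: Collection[str] = (),
                        excluded_moderators: Collection[str] = ()) -> list[Moderator]:
    """Moderators a producer could pick for one transaction in `language`."""
    return [m for m in moderators
            if language in m.languages
            and m.lead not in excluded_leads
            and m.name not in excluded_moderators]


pool = [
    Moderator("m1", "lead-1", frozenset({"en", "mi"})),
    Moderator("m2", "lead-2", frozenset({"en"})),
    Moderator("m3", "lead-1", frozenset({"es"})),
]
```

Establishing one's own Moderator would, in this model, simply mean adding a new entry to the pool.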

This dynamic equilibrium between individualism and collectivism is delicately maintained through these 'dials.' By employing these tools, a space is created that values inclusivity and diversity while preserving individual autonomy. This balance paves the way for the co-existence of both principles in the digital age.

Returning to our misinformation versus free speech example, Decentralized Rulebooks offer a unique solution by setting a universal, subjective yet agreeable baseline standard, facilitating varied definitions of misinformation.

The Moderator-Lead dynamic allows content creators to align their messages with interpretations of the standard that resonate with their values. By opting into a Rulebook, individuals are provided a platform to demonstrate their integrity, a quality that could potentially enhance the reach of their messages.

It's critical to remember that misinformation, while problematic, is often legal. In most contexts it is not illegal to lie, and misinformation can sometimes be the result of an honest error. The impact and context of misinformation become significantly more consequential in the realm of mass communication. For instance, an individual wishing to amplify their voice on social media might find it beneficial to establish their credibility under an anti-misinformation Rulebook. This incentive fosters a self-regulating mechanism that discourages the propagation of misleading content.

Consider an AI system tasked with screening content against a Rulebook standard. In a distributed social media platform, users willingly adhere to the Moderators of their choice. Users who commit to strict misinformation standards could potentially see their content spread more broadly due to increased audience trust. Therefore, if users desire greater reach, they subject themselves to higher accountability. This balance between preserving freedom of speech and promoting responsible information sharing is a significant advantage of this system.
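The trade-off between reach and accountability can be expressed as a platform ranking rule. The sketch below is purely hypothetical: `distribution_reach`, the strictness score in [0, 1], and the `MAX_BOOST` factor are invented for illustration and are not part of any specified Rulebook. Users who make no commitment, or who lose their standing, keep their base reach, so the rule rewards accountability rather than censoring its absence.

```python
MAX_BOOST = 4.0  # hypothetical: full commitment multiplies reach by up to 5x


def distribution_reach(base_reach: int, strictness: float, in_good_standing: bool) -> int:
    """Audience size a platform might grant a post under this hypothetical rule.

    strictness: how demanding the user's chosen anti-misinformation standard is,
    from 0.0 (no commitment) to 1.0 (strictest available).
    """
    if not 0.0 <= strictness <= 1.0:
        raise ValueError("strictness must be between 0 and 1")
    if not in_good_standing:
        return base_reach  # no boost, but no suppression either
    return round(base_reach * (1.0 + MAX_BOOST * strictness))
```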

The Rulebooks model encourages a diversity of thought, creating space for a wide array of perspectives, even those that may appear erroneous. Within this framework of self-determinism, users can align themselves with Moderators that resonate with their viewpoints or take the reins and establish their own Moderators. As a natural outcome of our collective instinct to align with widely accepted narratives, the system gravitates towards mainstream viewpoints. However, it also recognizes and accommodates divergent perspectives, fostering the belief that open engagement, not suppression, is vital in countering misinformation.

Although attention does serve as a catalyst for diverse content creation, the outcomes are heavily influenced by the utilities and the defined incentives. The rewards for maintaining honesty and integrity extend beyond moral high ground; they are tangible and correlate with benefits like broader influence or wider audience reach.

A high-standard Moderator associated with a large Lead reaps rewards for both themselves and the platforms where they apply. Their unblemished reputation amplifies their visibility, attracting higher traffic, thereby benefiting the platform. This unique symbiosis enables platforms to optimize their resource allocation effectively. Advanced technologies can be deployed to monitor content generated under Moderators whose interpretations adhere closely to regional laws, while less stringent monitoring may suffice for those Moderators whose users seldom cause issues.
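One way a platform might turn a Moderator's track record into a monitoring budget is to review a share of content proportional to the observed violation rate, with a floor so that every Moderator is still spot-checked. The function name, the `SENSITIVITY` constant, and the default floor below are assumptions made for this sketch.

```python
SENSITIVITY = 10.0  # hypothetical: a 10% violation rate already triggers full review


def review_sample_rate(violations: int, items: int, floor: float = 0.01) -> float:
    """Fraction of a Moderator's content to route to automated review.

    Scales with the observed violation rate, capped at 1.0 (review everything)
    and never below `floor`. A Moderator with no track record is fully reviewed.
    """
    if items <= 0:
        return 1.0
    rate = violations / items
    return min(1.0, max(floor, SENSITIVITY * rate))
```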

Those who prefer to steer clear of stringent standards usually find themselves nestled within close-knit groups, thereby naturally limiting the spread of misinformation. The underlying assumption is straightforward: humans derive pleasure from wielding influence, whether within personal circles or on a global stage.

This model relies on faith, but not blind faith. It leans on the human propensity for connection and meaningful interaction, creating an environment conducive to self-regulation. The framework does not aim to suppress dissent or allow unrestricted defiance. Instead, it leverages a subtle pressure towards cooperation. Misinformation is combated not through outright censorship, but by exploiting exposure as a tool against it, endorsing diversity and ensuring varied perspectives are both visible and accessible. This encourages informed discourse, fostering an Internet that thrives on transparency, accountability, and truth.

Third challenge

The complex web of regulation, transparency, and autonomy

There are persistent alarms that AI will replace jobs, produce a "useless class," and disrupt our economic systems so completely that significant collateral damage is unavoidable.

If we take a proactive approach and consider flexible, adaptive systems that align incentives, we may discover that these potential threats might actually present instrumental opportunities to improve our collective outcomes. Our existing systems, which seem to have enslaved us, could be transformed through a simple paradigm shift – one that values cooperation over competition where it truly matters.

This change does not necessitate the abolishment of capitalism. Instead, it calls for incorporating it into a more balanced socio-economic system that serves the interests of our collective world, not just a privileged few. The intention is to rethink and recalibrate the systems we live by, ensuring a sustainable and equitable future.

Regulation is a tangled, complex beast. Our current approach often focuses on ensuring the safety of the biggest and most influential organizations. However, such an approach can have unintended consequences. Like a game of whack-a-mole, when one threat is tackled, multiple others emerge in its place. The result? Stifled innovation and heightened threat levels. If we can learn to master the power of regulation, imagine the leaps humanity could make.

Transparency is crucial in this equation. It engenders trust and demystifies the decision-making process. However, the need to protect sensitive and proprietary information adds a layer of complexity. We require strong public oversight because, whether we realize it or not, we are frequently governed by the systems we use.

We must also consider that not everyone has the skills to interact with these technological solutions. Because technology now shapes the world of skilled and unskilled users alike, a purely tech-based approach to governance is untenable. Conversely, policymakers at the highest level often cannot grasp the nuanced realities of everyday Internet use. This makes a top-down, policy-dictated governance system without input from the user base equally unworkable. A balanced system therefore requires collaboration between top-down policy directives and bottom-up user experiences.

Within the Decentralized Rulebooks framework, policy is a living, evolving entity – much akin to the rapid nature of A/B testing in the technology space.
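As an illustration of what A/B testing a policy might look like, the sketch below assigns each user deterministically to one of two variants of a rule (for example, two interpretations of a misinformation standard) and compares how often interactions are flagged under each. The function names and the flag-rate metric are hypothetical, and a real experiment would also need a significance test before any variant is adopted.

```python
from __future__ import annotations

import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants: tuple[str, ...] = ("A", "B")) -> str:
    """Stable assignment: the same user always lands in the same variant of an experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    return variants[int.from_bytes(digest[:8], "big") % len(variants)]


def flag_rates(outcomes: dict[str, list[bool]]) -> dict[str, float]:
    """Share of interactions flagged (e.g. reported as harmful) under each variant."""
    return {variant: sum(flags) / len(flags) for variant, flags in outcomes.items() if flags}
```

Hashing the experiment name together with the user id keeps assignments stable within one experiment while reshuffling users across different experiments.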

This brings us to the critical issue of private enterprises operating under a veil of secrecy, disclosing neither their training data nor the weighting assigned to it. While privacy is crucial, inaccuracies and biases should not simply be written off as bugs. There have been instances of LLMs making outrageous claims to avoid political commentary. If training data were audited by trusted third parties, or by algorithms designed to detect bias, people could rest easy knowing that no concealed political agendas were encoded into the models.

The tightrope between regulation, transparency, and autonomy is an incredibly delicate one. Any approach must simultaneously foster cooperation, promote innovation, respect privacy, and ensure public oversight, all while upholding the principles of fairness and justice. No easy task.

Resolving Tensions of Regulation, Transparency, and Autonomy

Balancing regulation, transparency, and autonomy is an enormous task. Yet Rulebooks offer a clear roadmap forward. The framework not only resolves these conflicts but also enables continuous evolution, merging the strengths of each element into an adaptable, progressive system.

At the core of the Rulebooks framework lies the crucial distinction between policy and protocol. Policy forms a dynamic dialogue, an ever-evolving discussion, while the protocol serves as the actionable extension of this discourse, implemented across the digital landscape. This interplay ensures that policy outcomes from multistakeholder governance processes are actionable.

The Rulebooks framework introduces a distributed approach to managing privilege. Rather than prescribing rigid, inflexible roles, it offers fluid permissions that can be fine-tuned to meet diverse needs. These permissions can be provided in two ways:

As privileges that must be earned, akin to a license or certificate. They're not handed out freely but must be gained through proven merit, maintaining the value and purpose they represent.

As privileges that can be obtained without explicit permission. This category encourages freedom of action while ensuring that any misuse can be rectified by revoking the privilege.

In either scenario, privileges are not an entitlement; rather, they represent merit that demands ongoing demonstration and upkeep. This adaptable, comprehensive system of checks and balances, encompassing audits and accountability measures, helps reduce friction and improve the user experience amid the intricacies of the regulatory environment.
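The two routes to a privilege can be illustrated with a minimal Python sketch. It assumes a simple in-memory registry; the names `Route`, `Privilege`, and `PrivilegeRegistry`, the numeric merit threshold, and the revocation rule are hypothetical illustrations, not the actual Rulebooks data model.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    EARNED = "earned"                  # like a license: granted only after proven merit
    PERMISSIONLESS = "permissionless"  # available by default, revoked on misuse


@dataclass
class Privilege:
    name: str
    route: Route
    merit_threshold: int = 0  # hypothetical minimum merit score for EARNED privileges


@dataclass
class PrivilegeRegistry:
    """Hypothetical registry of who currently holds which privilege."""
    privileges: dict[str, Privilege] = field(default_factory=dict)
    granted: set[tuple[str, str]] = field(default_factory=set)  # (user, privilege) grants
    revoked: set[tuple[str, str]] = field(default_factory=set)  # (user, privilege) revocations

    def define(self, privilege: Privilege) -> None:
        self.privileges[privilege.name] = privilege

    def grant(self, user: str, name: str, merit: int) -> bool:
        """Grant an EARNED privilege if merit meets the threshold.
        PERMISSIONLESS privileges need no grant, so this returns False for them."""
        p = self.privileges[name]
        if p.route is Route.EARNED and merit >= p.merit_threshold:
            self.granted.add((user, name))
            self.revoked.discard((user, name))
            return True
        return False

    def revoke(self, user: str, name: str) -> None:
        """Either kind of privilege can be withdrawn after misuse."""
        self.granted.discard((user, name))
        self.revoked.add((user, name))

    def holds(self, user: str, name: str) -> bool:
        p = self.privileges[name]
        if (user, name) in self.revoked:
            return False
        if p.route is Route.PERMISSIONLESS:
            return True
        return (user, name) in self.granted


# Usage: one earned and one permissionless privilege (names are illustrative).
registry = PrivilegeRegistry()
registry.define(Privilege("publish_model", Route.EARNED, merit_threshold=10))
registry.define(Privilege("post_comment", Route.PERMISSIONLESS))

print(registry.holds("alice", "post_comment"))             # True: no permission needed
print(registry.holds("alice", "publish_model"))            # False: not yet earned
print(registry.grant("alice", "publish_model", merit=12))  # True: threshold met
registry.revoke("alice", "post_comment")
print(registry.holds("alice", "post_comment"))             # False: revoked after misuse
```

Merit is checked only once here, at grant time. Capturing the point that privileges need ongoing upkeep would mean re-running the check periodically or expiring grants, which this sketch leaves out.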

The framework goes beyond traditional governance systems. Rather than following the typically reactive pattern of policymaking, it fosters a proactive and dynamic environment in which issues are anticipated rather than merely responded to, enabling swift implementation and continuous refinement. This aligns well with the rapid pace of technological evolution and fosters a culture that supports timely, forward-thinking solutions.

While Rulebooks create a space that accommodates language and cultural diversity, it's essential to acknowledge that this system capitalizes on existing resources, such as real-time translation and virtual participation offered by mature multistakeholder governance forums like the UN’s Internet Governance Forum (IGF). The focus here is not to reinvent the wheel but to augment and leverage what already works effectively in our democratic and regulatory processes.

Within this framework, policy is a living, evolving entity, much like the rapid iteration of A/B testing in the technology sector. This underscores the experimental nature of Rulebooks: policy variants compete and evolve based on their outcomes, so that the most protective and transactionally efficient policies gain wider acceptance.

Policy designers are tasked with balancing these two goals: creating an environment conducive to transactions while ensuring maximum protection. How well they strike this balance largely determines how far a policy propagates within the system. In this sense, a successful policy behaves like a viral idea: it thrives and spreads according to its effectiveness and its appeal to the network.
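The competition between policy variants can be sketched, under heavy assumptions, as a discrete replicator-dynamics update, a standard model of competing strategies swapped in here purely for illustration. The numeric protection and efficiency scores, the equal weighting in `fitness`, and the class and function names are all hypothetical; the essay does not specify how Rulebooks measure or propagate policies.

```python
from dataclasses import dataclass


@dataclass
class PolicyVariant:
    name: str
    protection: float  # hypothetical score in [0, 1]: how well participants are protected
    efficiency: float  # hypothetical score in [0, 1]: how smoothly transactions proceed
    share: float       # fraction of the network currently using this variant


def fitness(v: PolicyVariant, weight: float = 0.5) -> float:
    """Blend the two goals policy designers must balance; the weight is an assumption."""
    return weight * v.protection + (1 - weight) * v.efficiency


def propagate(variants: list[PolicyVariant]) -> None:
    """One round of discrete replicator dynamics: each variant's new share is
    proportional to its current share times its fitness, so effective variants spread."""
    weighted = [v.share * fitness(v) for v in variants]
    total = sum(weighted)
    if total == 0:
        return  # nothing to redistribute
    for v, w in zip(variants, weighted):
        v.share = w / total


# Usage: three illustrative variants start with equal shares of the network.
variants = [
    PolicyVariant("strict", protection=0.9, efficiency=0.4, share=1 / 3),
    PolicyVariant("lenient", protection=0.3, efficiency=0.9, share=1 / 3),
    PolicyVariant("balanced", protection=0.8, efficiency=0.8, share=1 / 3),
]
for _ in range(10):
    propagate(variants)

for v in variants:
    print(f"{v.name}: {v.share:.2f}")
```

After ten rounds the balanced variant holds most of the network, while neither the most protective nor the most transaction-friendly extreme wins outright. The `weight` parameter is where the trade-off between the two goals would be set.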

The Rulebooks framework is not just about discussion; it is about democratizing policy implementation. By converting high-level deliberations into tangible actions linked directly to the Internet, it amplifies the impact of these policies across the web.

When encountering legal and regulatory constraints, Rulebooks demonstrate remarkable adaptability, integrating with diverse jurisdictions. The framework does not aim to upend or alter existing legal structures; instead, it works alongside them as a tool for representing local and international laws as information the whole world can interact with. The result is a dynamic, globally compatible system that both aligns with traditional legal landscapes and encourages change within them. It acknowledges the importance and impact of local laws, recognizing that they are enacted to serve the citizens of their respective regions. This approach facilitates progress and makes the benefits of legal evolution accessible to all, while respecting the regulatory sovereignty of each region, regardless of its size or location.

Transparency and power dynamics are given a fresh perspective within the Rulebooks framework. Although larger stakeholders wield considerable influence, the framework counters this with a focus on enhanced transparency. This is achieved through well-documented, publicly accessible governance processes that are adaptable and subject to scrutiny, thereby ensuring that power dynamics do not overshadow fairness.

It is critical to acknowledge that, on the Internet, the utility is always the most powerful actor. While the Rulebooks framework separates transactional governance from the utility, the utility regains its power once multistakeholder governance has been enacted. The difference is that instances of exclusion become easier to identify, because utilities disclose their blacklists during authentication. This makes it reasonable to require certain utilities to carry particular Rulebooks governed by certain Leads. TV licensing offers a real-world precedent: certain content comes with a “must-carry” obligation that broadcasters must honor to retain their license.
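The disclosure and must-carry ideas can be sketched as two checks that an auditor or client might run against a utility's authentication response. The `AuthResponse` shape, its field names, and the Rulebook names in the usage example are hypothetical; the essay does not define a wire format for authentication or blacklist disclosure.

```python
from dataclasses import dataclass, field


@dataclass
class AuthResponse:
    """Hypothetical record of a utility's answer when a user authenticates."""
    utility: str
    user: str
    accepted: bool
    carried_rulebooks: set[str] = field(default_factory=set)  # Rulebooks the utility enforces
    blacklist: set[str] = field(default_factory=set)          # exclusions disclosed up front


def explain_exclusion(resp: AuthResponse) -> str:
    """Classify an outcome so that any exclusion can be traced to a disclosed list."""
    if resp.accepted:
        return "accepted"
    if resp.user in resp.blacklist:
        return f"excluded by disclosed blacklist of {resp.utility}"
    # A rejection with no matching disclosure is exactly what should draw scrutiny.
    return f"undisclosed exclusion by {resp.utility}"


def missing_must_carry(resp: AuthResponse, must_carry: set[str]) -> set[str]:
    """Return required Rulebooks the utility does not carry (empty set means compliant)."""
    return must_carry - resp.carried_rulebooks


# Usage with illustrative names: one undisclosed rejection and one must-carry gap.
resp = AuthResponse("utility-a", "carol", accepted=False, carried_rulebooks={"child-safety"})
print(explain_exclusion(resp))  # undisclosed exclusion by utility-a
print(missing_must_carry(resp, {"child-safety", "election-integrity"}))  # {'election-integrity'}
```

The sketch does not stop a utility from excluding anyone; it only makes an exclusion without a matching disclosure, or a missing must-carry Rulebook, visible to whoever runs the check.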

In addressing enforcement and compliance, Rulebooks delineate clear jurisdictions and use the potential loss of privileges as a deterrent to rule violations. Combined with the framework's relatively low operational costs and the promise of Governance-as-a-Service, this design is meant to discourage corruption and to make honest service within the system both rewarding and sustainable. Yet it is crucial to remain mindful of scenarios in which vested interests control the Leads, the Moderators, and the Utility, which could produce deceptively democratic systems. Ultimately, Rulebooks provide a platform that encourages transparency and accountability, making it increasingly difficult for such situations to go unnoticed.

Decentralized Rulebooks are not just a solution to the conflicts between regulation, transparency, and autonomy. They set out a vision for a dynamic, adaptable system, ready to evolve in tandem with our rapidly changing digital landscape. Their balance of dynamic policymaking, flexible protocols, and sturdy governance practices paves the way for a digital future defined by fairness, inclusivity, and progress.

Final thoughts

The Temporal Nature of Governance

The governance of AI, like any other field, is marked by its temporal nature. It is not a fixed construct, but a living, ever-evolving entity that must adapt to the changing perspectives and needs of society.

We grapple today with questions that reflect our current understanding and sociocultural context: “Should an AI like GPT be able to criticize public figures? How should AI represent disputed views? How far should AI reflect or correct human biases?” These questions were recently posed in an open call from OpenAI³ and directly reflect the moment.

The collective decisions we make today should be seen as a framework for our present, not as a rigid blueprint for all future generations. It is crucial to remember that the voices of the next generation are not represented in our current discourse. Their time will come, and with it, their own novel perspectives and challenges.

The challenges posed by AI are not puzzles with finite solutions. They are complex, evolving issues that need constant vigilance and responsiveness – at a pace that may outstrip traditional democratic systems.

Thus, the only sustainable approach is to allow for fluidity, to encourage diversity in opinions, and to balance individual perspectives with collective consensus. Even within individualistic domains, it's important to present a spectrum of views and opinions, offering a window into collective thought. Of course, where observable facts exist, they should take precedence over cultural norms, biases, or beliefs.

But let's be clear: this essay represents one perspective among many. The power to shape the narrative cannot belong to one person, one social scientist, or one company.

This is why a Rulebooks system is needed: one that ensures human agency always remains at the helm. If the technology, or its producers, need tethering, it can provide that. But nothing is truly immutable; decisions made today may require revision tomorrow.

AI has the potential to revolutionize how we work in ways unlike anything we have known. Its disruptive power will be immense, and our responses should match that scale. We must embrace the temporal nature of our thinking and our desires, rejecting any notion that decisions, once made, are permanent.

The Urgency of AI Governance: A Call for Reimagining

As we stand on the precipice of the AI revolution, we cannot afford simply to contemplate first steps. We have, in fact, been wrestling with the implications of this new era for over a decade, witnessing the steady accumulation of power in the hands of a few corporate entities.

Consider the implications of a corporation restricting a nation's access to AI utilities in a politically motivated attempt to sabotage its economic prosperity. This is the sobering context we all inhabit today. Viewing the implementation of a voluntary governance framework as a threat therefore ignores the stark reality of our times: global network utilities, predominantly multinational, operate outside the jurisdiction of individual governments, boldly defying conventional structures of control and regulation.

The advent of Artificial General Intelligence (AGI), and potentially of superintelligence, is imminent, and a lack of control over our governing processes may have severe ramifications. The urgency of the situation cannot be overstated. What we need is not a hesitant shuffle forward but a bold leap into a new paradigm of governance.

Rulebooks provide policymakers with the dials of inclusion and exclusion, individualism and collectivism, regulation and innovation, transparency and security, and autonomy and control. It is crucial to remember that technology does not dictate action in this system. The distinct separation of protocol and policy within Rulebooks ensures that human values continue to shape our digital realm, challenging the notion that our future is inevitably determined by technology.

Echoing the principles for managing common resources documented by Nobel Prize recipient Elinor Ostrom⁴, this reimagining of governance needs to be driven by Web users themselves. It is they who inhabit the digital world, they who understand its contours and nuances, and they who must wield the power to shape its future.

In this era of AI, complacency is a luxury we cannot afford. The time for action is not tomorrow, not the next day, but today. Our collective response will shape not just our present, but the world of generations to come.

Let’s shape it together.


References

¹

Samson, Paul. "On Advancing Global AI Governance." Centre for International Governance Innovation, May 1, 2023.

²

Harari, Yuval Noah. "The Rise of the Useless Class." Ideas.TED, February 24, 2017.

³

Zaremba, Wojciech, et al. "Democratic Inputs to AI." OpenAI, May 25, 2023.

⁴

Ostrom, Elinor. "Governing the Commons: The Evolution of Institutions for Collective Action." Cambridge University Press, October 8, 2015.